Wednesday, April 22, 2009

Different Algorithms of Face Recognition

PCA

Derived from the Karhunen-Loeve transformation. Given an s-dimensional vector representation of each face in a training set of images, Principal Component Analysis (PCA) finds a t-dimensional subspace whose basis vectors correspond to the directions of maximum variance in the original image space. This new subspace is normally of lower dimension (t << s). If the image elements are considered as random variables, the PCA basis vectors are defined as the eigenvectors of the scatter matrix.
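The PCA description can be written out as a short derivation. This is only a sketch in the usual eigenface notation; the symbols N (number of training images), x_k (the k-th image vector), \mu (the mean image) and W (the s-by-t projection matrix) are assumptions introduced here, not names from the text above.

```latex
% Total scatter matrix of the training set
S_T = \sum_{k=1}^{N} (x_k - \mu)(x_k - \mu)^{T}

% PCA picks the projection that maximizes the scatter of the projected samples
W_{opt} = \arg\max_{W} \left| W^{T} S_T W \right| = [\, w_1 \; w_2 \; \dots \; w_t \,]

% The columns w_i are the eigenvectors of S_T with the t largest eigenvalues
S_T \, w_i = \lambda_i \, w_i , \qquad i = 1, \dots, t
```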

ICA

Independent Component Analysis (ICA) minimizes both second-order and higher-order dependencies in the input data and attempts to find the basis along which the data (when projected onto it) are statistically independent. Bartlett et al. provided two architectures of ICA for the face recognition task: Architecture I, statistically independent basis images, and Architecture II, a factorial code representation.

LDA

Linear Discriminant Analysis (LDA) finds the vectors in the underlying space that best discriminate among classes. For all samples of all classes, the between-class scatter matrix SB and the within-class scatter matrix SW are defined. The goal is to maximize SB while minimizing SW, in other words, to maximize the ratio det(SB)/det(SW) of the projected scatter matrices. This ratio is maximized when the column vectors of the projection matrix are the eigenvectors of (SW^-1 × SB).
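Written out, the LDA criterion looks roughly as follows. This is a sketch in the standard Fisher-discriminant notation; c (number of classes), N_i (samples in class i), X_i (the set of samples in class i), \mu_i (class mean) and \mu (overall mean) are symbols assumed here for illustration.

```latex
% Between-class and within-class scatter matrices
S_B = \sum_{i=1}^{c} N_i \, (\mu_i - \mu)(\mu_i - \mu)^{T}
S_W = \sum_{i=1}^{c} \; \sum_{x_k \in X_i} (x_k - \mu_i)(x_k - \mu_i)^{T}

% Fisher criterion: ratio of determinants of the projected scatter matrices
W_{opt} = \arg\max_{W} \frac{\left| W^{T} S_B W \right|}{\left| W^{T} S_W W \right|}

% Solved by the generalized eigenvectors; if S_W is nonsingular this is
% the eigenproblem of S_W^{-1} S_B mentioned in the text
S_B \, w_i = \lambda_i \, S_W \, w_i
\quad\Longleftrightarrow\quad
S_W^{-1} S_B \, w_i = \lambda_i \, w_i
```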

EP

An eigenspace-based adaptive approach that searches for the best set of projection axes in order to maximize a fitness function that measures both the classification accuracy and the generalization ability of the system. Because the solution space of this problem is too large to search exhaustively, it is solved using a specific kind of genetic algorithm called Evolutionary Pursuit (EP).

EBGM

Elastic Bunch Graph Matching (EBGM). All human faces share a similar topological structure. Faces are represented as graphs, with nodes positioned at fiducial points (eyes, nose...) and edges labeled with 2-D distance vectors. Each node contains a set of 40 complex Gabor wavelet coefficients at different scales and orientations (phase, amplitude). They are called "jets". Recognition is based on labeled graphs. A labeled graph is a set of nodes connected by edges; nodes are labeled with jets, edges are labeled with distances.

Kernel Methods

The face manifold in subspace need not be linear. Kernel methods are a generalization of linear methods. Direct non-linear manifold schemes are explored to learn this non-linear manifold.

Trace Transform

The Trace transform, a generalization of the Radon transform, is a new tool for image processing which can be used for recognizing objects under transformations, e.g. rotation, translation and scaling. To produce the Trace transform one computes a functional along tracing lines of an image. Different Trace transforms can be produced from an image using different trace functionals.

AAM

An Active Appearance Model (AAM) is an integrated statistical model which combines a model of shape variation with a model of the appearance variations in a shape-normalized frame. An AAM contains a statistical model of the shape and gray-level appearance of the object of interest which can generalize to almost any valid example. Matching to an image involves finding model parameters which minimize the difference between the image and a synthesized model example projected into the image.

3-D Morphable Model

The human face is intrinsically a surface lying in 3-D space. Therefore a 3-D model should be better for representing faces, especially for handling facial variations such as pose and illumination. Blanz et al. proposed a method based on a 3-D morphable face model that encodes shape and texture in terms of model parameters, and an algorithm that recovers these parameters from a single image of a face.

3-D Face Recognition

The main novelty of this approach is the ability to compare surfaces independent of natural deformations resulting from facial expressions. First, the range image and the texture of the face are acquired. Next, the range image is preprocessed by removing certain parts such as hair, which can complicate the recognition process. Finally, a canonical form of the facial surface is computed. Such a representation is insensitive to head orientations and facial expressions, thus significantly simplifying the recognition procedure. The recognition itself is performed on the canonical surfaces.

Digital Camera Face Recognition: How It Works?

Laplace edge detection with C++ code

for(Y=0; Y<=(originalImage.rows-1); Y++) {
    for(X=0; X<=(originalImage.cols-1); X++) {
        SUM = 0;

        /* image boundaries: the 5x5 mask does not fit within 2 pixels of the border */
        if(Y==0 || Y==1 || Y==originalImage.rows-2 || Y==originalImage.rows-1)
            SUM = 0;
        else if(X==0 || X==1 || X==originalImage.cols-2 || X==originalImage.cols-1)
            SUM = 0;

        /* Convolution starts here */
        else {
            for(I=-2; I<=2; I++) {
                for(J=-2; J<=2; J++) {
                    SUM = SUM + (int)( (*(originalImage.data + X + I +
                          (Y + J)*originalImage.cols)) * MASK[I+2][J+2]);
                }
            }
        }

        /* clamp the result to the 8-bit range */
        if(SUM>255) SUM=255;
        if(SUM<0) SUM=0;

        /* invert, so edges come out dark on a white background */
        *(edgeImage.data + X + Y*originalImage.cols) = 255 - (unsigned char)(SUM);
        fwrite((edgeImage.data + X + Y*originalImage.cols),sizeof(char),1,bmpOutput);
    }
}
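The listing above uses a 5x5 `MASK` without showing it. As a minimal self-contained sketch, the same loop can be run on a plain byte buffer; the kernel values below are an assumption (a commonly used 5x5 Laplacian whose weights sum to zero), and `laplace5x5` is a hypothetical helper name, not part of the original program.

```c
/* Assumed 5x5 Laplacian kernel: the weights sum to zero,
   so perfectly flat regions produce a response of 0. */
static const int MASK[5][5] = {
    {-1, -1, -1, -1, -1},
    {-1, -1, -1, -1, -1},
    {-1, -1, 24, -1, -1},
    {-1, -1, -1, -1, -1},
    {-1, -1, -1, -1, -1}
};

/* Hypothetical helper: filters a row-major 8-bit image `in` into `out`.
   Pixels within 2 of the border get a response of 0, as in the listing. */
void laplace5x5(const unsigned char *in, unsigned char *out, int rows, int cols)
{
    int x, y, i, j;
    for (y = 0; y < rows; y++) {
        for (x = 0; x < cols; x++) {
            int sum = 0;
            if (y >= 2 && y < rows - 2 && x >= 2 && x < cols - 2) {
                for (i = -2; i <= 2; i++)
                    for (j = -2; j <= 2; j++)
                        sum += in[(y + j) * cols + (x + i)] * MASK[i + 2][j + 2];
            }
            /* clamp to 0..255, then invert so edges come out dark */
            if (sum > 255) sum = 255;
            if (sum < 0) sum = 0;
            out[y * cols + x] = (unsigned char)(255 - sum);
        }
    }
}
```

On a flat image every output pixel is 255 (white); a pixel that is brighter than its 24 neighbours gives a positive response and comes out darker.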

SOBEL Edge Detection with C++ Code

Based on this one-dimensional analysis, the theory can be carried over to two-dimensions as long as there is an accurate approximation to calculate the derivative of a two-dimensional image. The Sobel operator performs a 2-D spatial gradient measurement on an image. Typically it is used to find the approximate absolute gradient magnitude at each point in an input grayscale image. The Sobel edge detector uses a pair of 3x3 convolution masks, one estimating the gradient in the x-direction (columns) and the other estimating the gradient in the y-direction (rows). A convolution mask is usually much smaller than the actual image. As a result, the mask is slid over the image, manipulating a square of pixels at a time.

void sobel( int *a, int sx, int sy, int *out)

{

// (In) a: The image (row major) containing elements of int.

// (In) sx: size of image along x-dimension.

// (In) sy: size of image along y-dimension.

// (Out) out: The output image with dimensions (sx-2, sy-2).

int i, j, ctr = 0;

int *p1, *p2, *p3;

p1 = a;

for( j = 0; j <>
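The listing above breaks off in the source right after the loop header. As a rough sketch of what the description implies (not a reconstruction of the missing code), the routine below applies the standard pair of 3x3 Sobel masks and approximates the gradient magnitude as |Gx| + |Gy|. The name `sobel_sketch` is hypothetical; the argument convention and the (sx-2, sy-2) output size follow the comments in the listing.

```c
#include <stdlib.h>

/* Hypothetical stand-in for the truncated routine.
   a:   input image, row major, sx columns by sy rows
   out: output image of size (sx-2) by (sy-2), one value per interior pixel */
void sobel_sketch(const int *a, int sx, int sy, int *out)
{
    int x, y, ctr = 0;
    for (y = 1; y < sy - 1; y++) {
        const int *p1 = a + (y - 1) * sx;   /* row above   */
        const int *p2 = a + y * sx;         /* current row */
        const int *p3 = a + (y + 1) * sx;   /* row below   */
        for (x = 1; x < sx - 1; x++) {
            /* Gx mask: [-1 0 1; -2 0 2; -1 0 1] */
            int gx = (p1[x + 1] + 2 * p2[x + 1] + p3[x + 1])
                   - (p1[x - 1] + 2 * p2[x - 1] + p3[x - 1]);
            /* Gy mask: [1 2 1; 0 0 0; -1 -2 -1] */
            int gy = (p1[x - 1] + 2 * p1[x] + p1[x + 1])
                   - (p3[x - 1] + 2 * p3[x] + p3[x + 1]);
            out[ctr++] = abs(gx) + abs(gy);
        }
    }
}
```

Using |Gx| + |Gy| instead of the square root of the sum of squares is a common shortcut that avoids floating point; the result is the "approximate absolute gradient magnitude" the text refers to.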

Robotic Fly for Surveillance

This is a major advancement in robotics because it’s the first two-winged robot built to such a small scale that it can pass as a real fly, but there are still some challenges.

At the moment, Wood’s fly is limited by a tether that keeps it moving in a straight, upward direction. The researchers are currently working on a flight controller so that the robot can move in different directions.

The researchers are also working on an onboard power source. (At the moment, the robotic fly is powered externally.) Wood says that a scaled-down lithium-polymer battery would provide less than five minutes of flying time.

Tiny, lightweight sensors need to be integrated as well. Chemical sensors could be used, for example, to detect toxic substances in hazardous areas so that people can go into the area with the appropriate safety gear. Wood and his colleagues will also need to develop software routines for the fly so that it will be able to avoid obstacles.

Tuesday, April 21, 2009

Comparison between PTCL and Link Dot Net Dsl ( Ptcl vs. Ldn )

PTCL:

Watch FREE Movies, Cricket Matches, Pakistani Plays & listen to all types of Music, Religious and Children's content exclusively available to PTCL Broadband Users.

http://entertainment.ptcl.net/

LDN:

LINKdotNET, after its successful launch in Pakistan extends another step towards changing the way you live by offering enhanced DSL packages for consumers.

http://mylink.net.pk/

Download speed:

PTCL student package 839 Rs. = 100 kbps average

LDN student package 885 Rs. = 28 kbps average (if you are lucky, the maximum you will get is 48 kbps)

Charges:

LDN costs more than PTCL.

Disconnection Issue:

The disconnection issue is almost the same for both and mostly depends on your PTCL landline. But if you have the LDN DSL service, you still have to wait for the PTCL lineman whenever a problem arises on your landline. So that is another plus point for PTCL.

Smart TV:

You can get other facilities such as Smart TV with PTCL.

Conclusion:

As a user of the Link Dot Net student package myself, I suggest you go for PTCL DSL if it is available in your area.

Stepper Motor code with proteus design

# include

# include

void delay(void);

void main (void)

{

int i;

for(i=0;i<(270/(1.8*4));i++)

{

P2_0=0;

delay();

P2_0=1,P2_1=0;

delay();

P2_1=1,P2_2=0;

delay();

P2_2=1,P2_3=0;

delay();

P2_3=1;

}

for(i=0;i<(270/(1.8*4));i++)

{

P1_0=0;

delay();

P1_0=1,P1_1=0;

delay();

P1_1=1,P1_2=0;

delay();

P1_2=1,P1_3=0;

delay();

P1_3=1;

}

for(i=0;i<(180/(1.8*4));i++)

{

P3_0=0;

delay();

P3_0=1,P3_1=0;

delay();

P3_1=1,P3_2=0;

delay();

P3_2=1,P3_3=0;

delay();

P3_3=1;

}

for(i=0;i<(270/(1.8*4));i++)

{

P2_3=0;

delay();

P2_3=1,P2_2=0;

delay();

P2_2=1,P2_1=0;

delay();

P2_1=1,P2_0=0;

delay();

P2_0=1;

}

for(i=0;i<(270/(1.8*4));i++)

{

P1_4=0;

delay();

P1_4=1,P1_5=0;

delay();

P1_5=1,P1_6=0;

delay();

P1_6=1,P1_7=0;

delay();

P1_7=1;

}

while(1);

}

void delay(void)

{

TMOD=0x10;

TL1=0x58;

TH1=0x9E;

TR1=1;

while ( TF1 == 0 );

TR1=0;

TF1=0;

}
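Two numbers in the stepper program are worth working out: how many times each `for` loop runs, and how long `delay()` takes. The sketch below assumes a 1.8° step angle (as the loop bounds suggest) and works in tenths of a degree to stay in integer arithmetic; `loop_passes` and `timer_cycles` are hypothetical helper names.

```c
/* Number of loop passes needed to cover `angle_tenths` (tenths of a degree)
   when each pass issues 4 steps of `step_tenths` each. Rounds up, because a
   test such as i < 37.5 lets an integer counter run from 0 to 37. */
int loop_passes(int angle_tenths, int step_tenths)
{
    int per_pass = 4 * step_tenths;
    return (angle_tenths + per_pass - 1) / per_pass;
}

/* Machine cycles a 16-bit timer counts from its reload value (TH:TL)
   until it overflows and sets the overflow flag. */
long timer_cycles(unsigned char th, unsigned char tl)
{
    long reload = ((long)th << 8) | tl;
    return 65536L - reload;
}
```

For the 270° loops this gives 38 passes, i.e. 38 × 4 × 1.8° = 273.6°, a slight overshoot; the 180° loop needs exactly 25 passes. With TH1 = 0x9E and TL1 = 0x58 the timer counts 25000 machine cycles, which would be about 25 ms per step if the board runs from a 12 MHz crystal (an assumption; the stepper post does not state the clock).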

Friday, April 17, 2009

SOURCE CODE " LCD plus Keypad interfacing with Atmel 8051 in C"

# include

# include

int g;

sbit row1 = P3^0;

sbit row2 = P3^1;

sbit row3 = P3^2;

sbit row4 = P3^3;

char scan_key(void)

{

P3 = 15;

while(1)

{

if ( P3 != 15 ) break;

}

P3 = 239;

if( row1 == 0 ){g = 1; return '1';}

if( row2 == 0 ){g = 4; return '4';}

if( row3 == 0 ){g = 7; return '7';}

if( row4 == 0 ){ return '*';}

P3 = 223;

if( row1 == 0 ){g = 2; return '2';}

if( row2 == 0 ){g = 5; return '5';}

if( row3 == 0 ){g = 8; return '8';}

if( row4 == 0 ){g = 0; return '0';}

P3 = 191;

if ( row1 == 0 ){g = 3; return '3';}

if ( row2 == 0 ){g = 6; return '6';}

if ( row3 == 0 ){g = 9; return '9';}

if ( row4 == 0 ){ return '#';}

}

int main()

{

int i, c, f = 0,ali = 0;

char a;

P1 = 56, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for (i = 0; i < 255; i++);

P1 = 15, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for (i = 0; i < 255; i++);

P1 = 1, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(i = 0;i < 255; i++);

P1 = 28, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(i = 0;i < 255; i++);

P1 = 129, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(i = 0;i < 255; i++);

while (1)

{

a = scan_key();

if( a == '*')

{

P1 = 1, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

f = 0;

g = 0;

for( c = 0;c < 32000; c++);

}

if( a != '*' && a != '#')

{

P1 = a, P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(c = 0;c < 32000; c++);

f = f + g;

f = f * 10;

}

if( a == '#')

{

f = f/10;

a = f;

P1 ='=', P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for( c = 0;c < 32000; c++);

f = 0;

g = 0;

goto label;

}

while (0)

{

label:

P1 = a, P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for( c = 0; c <32000 ; c++);

P1 =' ', P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for( c = 0; c < 32000; c++);

}

}

}

How to use a keypad ( keypad interfacing )

There are basically two commonly known methods for keypad interfacing. In one, 5 volts is supplied and the row and column of the pressed key are pulled to 0; this is how the key is scanned.

But the method we used is to drive all the rows to 1 (that is, supply 5 volts) and then wait for a key press; the row and column of the pressed key are then scanned simultaneously but indirectly.

We used a 4 * 3 keypad, as a 4 * 4 one was not available in the market. So we have 4 rows and 3 columns in our keypad, which is actually a digital phone keypad. We used a converter at the end to solder the wires to it and insert them directly into the breadboard.

Row 1 to row 4 are attached to pin 0 to pin 3 of port 3, and similarly pin 4 to pin 6 are used for the columns. The last pin of port 3 is not used.

Key scanning is quite simple: when a row and a column are shorted through the keypad, a single key is identified and displayed on the LCD.
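The scan values 239, 223 and 191 used in the source code follow directly from this wiring: each one pulls a single column pin low and leaves everything else high. The sketch below shows the arithmetic on plain integers rather than on the real port; `column_mask`, `decode_key` and `KEYMAP` are hypothetical names introduced for illustration.

```c
/* Key layout assumed from the text: rows on P3.0-P3.3, columns on P3.4-P3.6. */
static const char KEYMAP[4][3] = {
    {'1', '2', '3'},
    {'4', '5', '6'},
    {'7', '8', '9'},
    {'*', '0', '#'}
};

/* Port value that drives one column (0..2) low and leaves every other pin high. */
unsigned char column_mask(int col)
{
    return (unsigned char)(0xFF & ~(1 << (4 + col)));
}

/* Given the driven column and the value read back from the port, return the
   key whose row bit reads 0, or 0 if no row in that column is pulled low. */
char decode_key(int col, unsigned char port_value)
{
    int row;
    for (row = 0; row < 4; row++) {
        if ((port_value & (1 << row)) == 0)
            return KEYMAP[row][col];
    }
    return 0;
}
```

Here `column_mask(0)`, `column_mask(1)` and `column_mask(2)` evaluate to 239, 223 and 191. A pressed key connects its row to the low column, so that row bit reads 0 while the other rows stay at 1.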

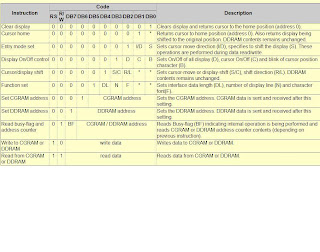

LCD pin descriptions

While Vcc and Vss provide +5V and ground respectively, Vee is used for controlling LCD contrast.

RS, register select:

There are two very important registers inside LCD. The RS pin is used for their selection as follows. If RS =0, the instruction command code register is selected, allowing the user to send a command such as clear display, cursor at home, etc. If RS=1, the data register is selected, allowing the user to send data to be displayed on the LCD.

R/W, read/write:

R/W input allows the user to write information to the LCD or read information from it. R/W=1 when reading; R/W=0 when writing.

E, enable:

The enable pin is used by the LCD to latch information presented to its data pins. When data is supplied to data pins, a high-to-low pulse must be applied to this pin in order for the LCD to latch in the data present at the data pins. This pulse must be a minimum of 450 ns wide.

D0-D7:

The 8-bit data pins, D0-D7, are used to send information to the LCD or read the contents of the LCD’s internal registers.

To display letters and numbers, we send ASCII codes for the letters A-Z, a-z and numbers 0-9 to these pins while making RS=1.

There are also instruction command codes that can be sent to the LCD to clear the display, force the cursor to the home position, or blink the cursor.

Introduction to ATMEL 89C51

The ATMEL 89C51 is a low power, high performance CMOS 8-bit microcontroller with 4K bytes of In System Programmable Flash Memory. It is suitable for cost effective embedded systems.

It has following standard features:

4K bytes of flash memory, 128 bytes of RAM, 32 I/O pins, two 16 bit timers/counters, a full duplex serial port, on chip oscillator, and clock circuitry, six interrupt sources, standard 40 pin package, external memory interface.

RST:

This is the reset input. This input should normally be at logic 0. A reset is accomplished by holding the RST pin high for at least two machine cycles. Power-on reset is normally performed by connecting an external capacitor and a resistor to this pin.

P3.0:

This is a bi-directional I/O pin with an internal pull-up resistor. This pin also acts as the data receive input (RXD) when receiving serial data.

P3.1:

This is a bi-directional I/O pin with an internal pull-up resistor. This pin also acts as the data transmit output (TXD) when sending serial data.

XTAL1 and XTAL2:

These pins are where the external crystal should be connected for the operation of the internal oscillator. Normally two 33 pF capacitors are connected with the crystal as shown. A machine cycle is obtained by dividing the crystal frequency by 12. Thus, with a 12 MHz crystal, the machine cycle is 1 µs. Most machine instructions execute in one or two machine cycles.
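The divide-by-12 rule is easy to check numerically. A minimal sketch, assuming a classic 12-clock 8051 core as described above; `machine_cycle_ns` is a hypothetical helper name.

```c
/* Machine-cycle time in nanoseconds: one machine cycle is 12 crystal periods. */
long machine_cycle_ns(long crystal_hz)
{
    return (long)(12LL * 1000000000LL / crystal_hz);
}
```

A 12 MHz crystal gives 1000 ns (1 µs) per machine cycle; the other widely used value, 11.0592 MHz, gives about 1085 ns.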

P3.3:

This is a bi-directional I/O pin. This pin also is the external interrupt (INT1) pin.

P3.4:

This is a bi-directional pin. This pin is also used for the counter 0 input (T0) pin.

P3.5:

This is a bi-directional pin. This is also used for the counter 1 input (T1) pin.

GND:

It is the ground pin.

P3.6:

This is a bi-directional pin. It is also the external data memory write (WR) pin.

P3.7:

This is a bi-directional pin. This pin is also the external data memory read (RD) pin.

P1.0:

This is a bi-directional pin. On the 89C51 it has an internal pull-up resistor, like the rest of port 1.

P1.1:

This is a bi-directional pin. It also has an internal pull-up resistor.

P1.2 to P1.7:

These are the remaining bi-directional pins of port 1. These pins have internal pull-up resistors.

VCC:

It is the supply voltage. 5 V is supplied here.

P0.0 to P0.7:

These are eight I/O pins. These have no internal pull-up resistors.

P2.0 to P2.7:

These are the eight I/O pins. These have internal pull-up resistors.

EA/VPP:

This is the external access enable pin on the 8051. EA should be connected to VCC for internal programme executions. This pin also receives the programming voltage during programming.

PSEN:

This is the programme store enable pin. This is used when using external memory.

ALE/PROG:

This is the address latch enable pin.

· A 2X16 double line LCD, with backlight option and 16 pin package. It supports HD44780 standard.

· A 4X3 telephone keypad. Having 4 rows and 3 columns.

· A crystal oscillator of 12MHz frequency.

· Two 33pF Tantalum bead Capacitors.

· One 10 µF capacitor and one 8.2K ohm 0.25 W resistor for reset.

· A 500 ohm resistor for adjusting the contrast.

· A regulated 5V power supply with LM7805 regulator for providing a constant voltage output.

· Assembled on bread-board.

CONTENTS of Atmel Project keypad and 2 line LCD

Ø List of components used.

Ø Introduction about ATMEL 89C51 microcontroller.

Ø A brief insight into the world of Liquid Crystal Display (LCD).

Ø How to use a Keypad.

Ø Schematic of the project.

2. PROGRAMME DETAILS

Ø Source code (.c file).

Ø What is inside our code and how does our amazing code work!

3. DIFFICULTIES AND HURDLES IN OUR WAY

LCD plus Keypad interfacing with 8051 Atmel

Our code is quite simple. We used simple commands to initialize the LCD, and all the data sent is written in decimal. The RS, RW and E pins of the LCD are handled separately. We did not use many functions in our program, as they usually make the program tricky. We tried to make the program as simple as possible.

LCD:

We used a blue 16 * 2 LCD, the JHD 1602, with 16 pins (actually 14; the last two are for the backlight).

LCD interfacing is quite simple and easy to understand, as each pin is handled and fed with data individually. This made the code quite easy.

PINs of LCD:

The data pins of the LCD are connected to port 1 of the microcontroller, RS to pin 1 of port 2, RW to pin 2 of port 2 and E to pin 3 of port 2.

Pin 15 is connected directly to 5 volts and pin 16 is grounded so that the backlight can be used. The basic function of the backlight is to improve visibility: we are using a blue LCD and the text is written in grayish white, which is not visible without the backlight.

PIN 1, 2 & 3:

Pin 1 is grounded, pin 2 is connected to 5 volts and pin 3 (contrast) is also grounded manually.

RS, RW & E PIN:

These are connected to port 2 and are driven individually whenever needed. RS is 0 for a command to the LCD and 1 for data writing. RW is always 0, as we never read from the LCD, and E is pulsed from high to low whenever either a command or data is sent to the LCD.

EXPLANATION:

FUNCTION SET:

First of all, the function set command is sent to the LCD: the data port is fed with 56 decimal (38 hex). This selects the 8-bit interface and the two-line mode of our 16 * 2 LCD. RS is zero. RW is zero. The enable pin is fed first a high and then a low signal.

P1 = 56, P2_1 = 0, P2_2 = 0,P2_3 = 1;

P2_3 = 0;

DISPLAY & Cursor ON OFF:

Secondly, the display on/off and cursor on/off (also blinking) command is sent to the LCD, with the data pins fed with 15 decimal. RS is zero. RW is zero. The enable pin is fed first a high and then a low signal.

P1 = 15, P2_1 = 0, P2_2 = 0,P2_3 = 1;

P2_3 = 0;

CLEAR DISPLAY:

To clear the display, the data pins are fed with 1. RS is zero. RW is zero. The enable pin is fed first a high and then a low signal.

P1 = 1, P2_1 = 0,P2_2 = 0,P2_3 = 1;

P2_3 = 0;

DISPLAY SHIFT:

To shift the display right, the data pins are fed with 28 decimal (1C hex). RS is zero. RW is zero. The enable pin is fed first a high and then a low signal.

P1 = 28, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

STARTING POSITION:

The data pins are fed with 81 hex (129 decimal), following the assembly-language examples in Mazidi's book. RS is zero. RW is zero. The enable pin is fed first a high and then a low signal.

P1 = 129, P2_1 = 0, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

Now the LCD's initialization is complete.
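The five decimal values sent during initialization are easier to read once they are mapped to instruction names. In the HD44780 instruction set, which this LCD supports, the highest set bit of a command byte identifies the instruction. The helper below is a small sketch of that rule; `lcd_command_name` is a hypothetical name, not a function from the project.

```c
/* Classify an HD44780 command byte by its highest set bit. */
const char *lcd_command_name(unsigned char cmd)
{
    if (cmd & 0x80) return "set DDRAM address";
    if (cmd & 0x40) return "set CGRAM address";
    if (cmd & 0x20) return "function set";
    if (cmd & 0x10) return "cursor/display shift";
    if (cmd & 0x08) return "display on/off control";
    if (cmd & 0x04) return "entry mode set";
    if (cmd & 0x02) return "return home";
    if (cmd & 0x01) return "clear display";
    return "not a defined instruction";
}
```

Applied to the sequence above: 56 (0x38) is a function set, 15 (0x0F) is display on/off control, 1 (0x01) is clear display, 28 (0x1C) is a cursor/display shift and 129 (0x81) sets the DDRAM address.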

KEYPAD INTERFACING:

Keypad interfacing involves a function call, which also lets us handle the debouncing problem: there is a delay, but it comes after the pressed key is displayed. Proper use of a delay implemented with a for loop made this quite practical.

Whenever there is a need to input data, a function is called that determines which key was pressed on the keypad and then either displays it or executes the corresponding command.

EXPLANATION:

The value of the key is saved in the variable 'g' and at the same time added to the variable 'f', and 'f' is then multiplied by 10. If another key is scanned, the new value of 'g' is added to 'f' (i.e. "f=f+g") and 'f' is again multiplied by 10. The procedure continues until the enter or reset key is pressed.

if(a!='*' && a!='#')

{

P1 = a, P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(c=0;c<32000;c++);

f=f+g;

f=f*10;

}

ENTER:

Whenever the enter key is pressed, the value of 'f' is divided by 10 and the resulting number (which is actually the ASCII code) is sent to the LCD to display its corresponding character. (This portion is at the end of the code and can only be reached through the goto statement.) The values of 'f' and 'g' are then reset to zero.

"f=0;

g=0;

"

if(a=='#')

{

f=f/10;

a=f;

P1 ='=', P2_1 = 1,P2_2 = 0,P2_3 = 1;

P2_3 = 0;

for(c=0;c<32000;c++);

f=0;

g=0;

goto label;

}

while(0)

{

label:

P1 = a, P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(c=0;c<32000;c++);

P1 =' ', P2_1 = 1, P2_2 = 0, P2_3 = 1;

P2_3 = 0;

for(c=0;c<32000;c++);

}

RESET:

Whenever the reset key is pressed, the clear display command is executed and the values of 'f' and 'g' are set to zero.

"f=0;

g=0;

"

if(a=='*')

{

P1 = 1, P2_1 = 0,P2_2 = 0,P2_3 = 1;

P2_3 = 0;

f=0;

g=0;

for(c=0;c<32000;c++);

}

This whole procedure runs inside an infinite while loop. In this way we have a numeric keyboard with which every character can be written on the LCD by entering its ASCII code.
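The accumulate-then-divide logic can be replayed off the microcontroller to see which character a key sequence produces. This is a sketch that mirrors the 'f' arithmetic described above on an ordinary string of key presses; `replay_keys` is a hypothetical helper, and it ignores the LCD and the delays entirely.

```c
/* Feed in the keys pressed; returns the character code that would be sent
   to the LCD when '#' (enter) arrives, or -1 if '#' is never pressed. */
int replay_keys(const char *keys)
{
    int f = 0;
    for (; *keys != '\0'; keys++) {
        if (*keys == '*') {
            f = 0;                          /* reset key clears the value */
        } else if (*keys == '#') {
            return f / 10;                  /* undo the last multiply by 10 */
        } else {
            f = (f + (*keys - '0')) * 10;   /* f = f + g; f = f * 10; */
        }
    }
    return -1;
}
```

Pressing 6, 5, # leaves f at 60, then 650, and finally 650 / 10 = 65, which is the ASCII code of 'A'. Since 'f' is an int on the 8051, long digit sequences would overflow, so this scheme only suits short codes.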

Sunday, March 29, 2009

[ INTERESTING ] & [ USEFUL ] [ LINKS ]

ENCYCLOPEDIAS

USEFUL SITES FOR WEBMASTER

FUN BLOGS

TOP 3 GLOBAL SITES

FREE PICTURE SHARING SITES

TOP STORAGE AND FILE SHARING SITES

WATCH FREE MOVIES ONLINE

TOP 3 TORRENT SITES

TOP ENTERTAINMENT SITES

INTERNET SUCCESS STORIES

FREE SOFTWARE SITES

FREE AUDIO BOOKS

Paper on Face Recognition

A. K. Jain, A. Ross, and S. Prabhakar, "An Introduction to Biometric Recognition," IEEE Trans. on CSVT, Special Issue on Image- and Video- Based Biometrics, August, 2003. W. Zhao, R. Chellappa, A. Rosenfeld, and P. J. Phillips,(2000) "Face Recognition: A Literature Survey", UMD CfAR Technical Report CAR-TR948. R. Chellappa, C.L. Wilson, and S. Sirohey, “Human and machine recognition of faces: a survey”, Proceedings of the IEEE, Vol. 83, pp. 705-741, May 1995. M. Yang, D. J. Kriegman, and N. Ahuja, "Detecting Face in Images: A Survey", IEEE Trans. on PAMI, Vol. 24, No. 1, pp. 34-58, Jan. 2002. E. Hjelmas, "Face Detection: A survey", Computer Vision and Image Understanding, Vol 83, pp. 236-274, 2001. J. Zhang, Y. Yan, and M. Lades, “Face Recognition: Eigenface, Elastic Matching, and Neural Net”, Proceedings of IEEE, Vol. 85, No. 9, pp.1423-1435, Sep. 1997.n R. Brunelli and T. Poggio, “Face recognition: features versus templates”, IEEE Trans. on PAMI, Vol. 15, No. 10, pp. 1042-1052, Oct. 1993. XM2VTS Samy Bengio, Johnny Mariéthoz, and S. Marcel, "Evaluation of Biometric Technology on XM2VTS ", IDIAPRR 21, IDIAP, 2001. J. Matas, M. Hamouz, K. Jonsson, J. Kittler, C. Kotropoulos, and A. Tefas ,"Comparison of Face Verification Results on the XM2VTS Database", Proceedings of ICPR, 2000. Feret P. J. Phillips, P. Grother, R. J. Micheals, D. M. Blackburn, E. Tabassi, M. Bone, "Face Recognition Vendor Test: Evaluation Reprot" P. J. Phillips, P. Grother, R. J. Micheals, D. M. Blackburn, E. Tabassi, M. Bone, "Face Recognition Vendor Test: Overview and Summary" P. J. Phillips, P. Grother, R. J. Micheals, D. M. Blackburn, E. Tabassi, M. Bone, "Face Recognition Vendor Test: Technical Appdndices" P. J. Phillips, H. Moon, S. A. Rizvi, and P. J. Rauss, "The Feret Evaluation Methodology for Face-Recognition Algorithms", IEEE Trans. on PAMI, Vol. 22, No. 10, Oct. 2000. P. J. Phillips, H. Moon, and S. A. 
Rizvi, "The Feret Evaluation Methodology for Face-Recognition Algorithms", Proceedings of Computer Vision and Pattern Recognition, pp. 137-143, 1997. P. J. Phillips, H. Moon, S. A. Rizvi, and P. J. Rauss, “The FERET Evaluation”, in Face Recognitioin: From Theory to Applications, H. Wechsler, P. J. Phillips, V. Bruce, F.F. Soulie, and T.S. Huang, Eds., Berlin: Springer-Verlag, 1998. P. J. Phillips, P. J. Rauss, and S. Z. Der, “FERET (Face Recognition Technology) Recognition Algorithm Development and Test Results”, October 1996. Army Research Lab technical report 1995. P. J. Phillips, H. Moon, P. J. Rauss, and S. Rizvi, “The FERET Evaluation Methodology for Face Recognition Algorithms”. S. Rizvi, P. J. Phillips and H. Moon, “The FERET Verification Testing Protocol for Face Recognition Algorithms”. "The Face Recognition Technology (Feret) Database" Eigenface M. Turk, "A Random Walk Through Eigenface", IEICE Trans. INF. & SYST., Vol. E84-D, No. 12, Dec. 2001. M. Turk and A. Pentland, "Eigenfaces for recognition", J. of Cognitive Neuroscience, Vol. 3, No. 1, pp. 71-86, 1991. M. Turk and A. Pentland, “Face recognition using eigenfaces”, Proceedings of IEEE, CVPR, pp. 586-591, Hawaii, June, 1991. I. Craw, N. Costen, T. Kato, and S. Akamatsu, “How Should We Represent Faces for Automatic Recognition?” IEEE Trans. on PAMI, Vol. 21, No.8, pp. 725-736, August 1999. A. Pentland, B. Moghaddam, T. Starner, “View-based and modular eigenspaces for face recognition”, Proceedings of IEEE, CVPR, 1994. B. Moghaddam and A. Pentland, "Face Recognition using View-Based and Modular Eigenspace", Automatic Systems for the Identification and Inspection of Humans, 2277, 1994. P. Hall, D. Marshall, and R. Martin, "Merging and Splitting Eigenspace Models," IEEE Trans. on PAMI, Vol. 22, No. 9, pp. 1042-1049, 2002. A. Yilmaz and M. G?kmen, “Eigenhill vs. eigenface and eigenedge”, Pattern Recognition, Vol. 34, pp. 181-184, 2001. J. Yang, and J. 
Yang, "From Image Vector to Matrix: A Straightforward Image Projection Technique-IMPCA vs. PCA", Pattern Recognition, Vol. 35, 2002. T. Shakunaga, and K. Shigenari, "Decomposed Eigenface for Face Recognition under Various Lighting Conditions", Proceedings of CVPR, pp. 864-871, 2001. J. B. Tenenbaum, and W. T. Freeman, "Separating Style and Sontent with Linear Models," Neural Computation, Vol. 12, pp. 1247-1283, 2000. W. T. Freeman, and J. B. Tenenbaum, "Learning Bilinear Models for Two-Factor Problems in Vision," Proceedings of CVPR, pp. 554-560, 1997. M. Alex, O. Vasilescu, and D. Terzopoulos, "Multilinear Analysis of Image Ensembles: TensorFaces," Proceedings of ECCV, pp. 447-460, 2002. H. Kim, D. Kim, and S. Y. Bang, "Face Retrieval Using 1st- and 2nd-Order PCA Mixture Model," Proceedings of ICIP, pp. 605-608, 2002. D. S. Turaga, and T. Chen, "Face Recognition Using Mixtures of Principal Components," Proceedings of ICIP, pp. 101-104, 2002. A. Lemieux, and M. Parizeau, "Experiments on Eigenfaces Robustness", Proceedings of ICPR, pp. 421-424, 2002. C. Chennubhotla, and A. Jepson, "Sparse PCA Extracting Multi-scale Structure from Data", Proceedings of ICCV, pp. 641-647, 2001. W. S. Yambor, B. A. Draper, and J. R. Beveridge, "Analyzing PCA-based Face Recognition Algorithm: Eigenvector Selection and Distance Measures", R. Gross, J. Yang, and A. Waibel, "Growing Gaussian Mixture Models for Pose Invariant Face Recognition," Proceedings of ICPR, pp. 1088-1091, 2000. C. Ki-Chung, K. S. Cheol, K. S. Ryong, “Face Recognition Using Principal Component Analysis of Gabor Filter Responses”, Proceedings of International Workshop on Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems, pp. 53-57, 1999. H. Boratschnig, L. Paletta, M. Prantl and Axel Pinz, "Active Object Recognition in Parametric Eigenspace", in: P.H. Lewis and M.S. Nixon (ed), Proc. Brit. Mach. Vision. Conf. pp 629-638, 1998. H. M. El-Bakry, M. A. 
Abo-Elsoud, "Integrating Fourier Descriptors and PCA with Neural Networks for Face Recognition", Seventh National Radio Science Conference, Feb. 2000. H. Peng and D. Zhang, "Dual EIgenspace Method for Human Face recognition", IEEE Electronics Letters, Vol. 33, No. 4, pp.283-284, 1997. L. J. Shen and H. C. Fu, "A Principal Component Based BDNN for Face Recognition", International Conference on Neiral Network, Vol. 3, pp. 1368-1372, 1997. S. Lee, S. Jung, J. Kwon, and S. Hong, "Face Detection and Recognition Using PCA", Proceedings of the IEEE Region 10 Conference, Vol. 1, pp. 84-87, 1999. E. Lizama, D. Waldoestl, and B. Nickolay, "An Eigenfaces-Based Automatic Face Recognition System", IEEE International Conference on Computational Cybernetics and Simulation, Vol. 1, pp. 174-177, 1997. A. Z. Kouzani, F. He, and K. Sammut, "MultiResolution Eigenface-Components", Proceedings of IEEE Region 10 Annual Conference. Speech and Image Technologies for Computing and Telecommunications, Vol. 1, pp. 353-356, 1997. P. Navarrete and J. Ruiz-del-Solar, "Eigenspace-based Recognition of Faces: Comparisons and A New Approach", Proceeddings of Image Analysis and Processing, pp. 42-47, 2001. Fisher Face (LDA) V. Belhumeur, J. Hespanda, and D. Kiregeman, “Eigenfaces vs. fisherfaces: recognition using class specific linear projection”, IEEE Trans. on PAMI, Vol. 19, No. 7, pp. 711-720, July 1997. W. Zhao, R. Chellapa, and P. Philips, “Subspace linear discriminant analysis for face recognition”, Technical Report CAR-TR-914, 1996. T. Cooke, "Two Variations on Fisher's Linear Discriminant for Pattern Recognition", IEEE Trans. on PAMI, Vol. 24, No. 2, pp. 268-273, Feb. 2002. Aleix M. Martinez, and Avinash C. Kark, “PCA versus LDA”, IEEE Trans. on PAMI, Vol. 23, No. 2, pp. 228-233, Feb. 2001. M. Loog, R.P.W. Duin and R. Haeb-Umbach, "Multiclass Linear Dimension Reduction by Weighted Pairwise Fisher Criteria", IEEE Trans. PAMI, Vol. 23, No. 7, pp. 762-766, July 2001. K. Etemad, and R. 
Chellapa, “Discriminant analysis for recognition of human faces”, Proceedings of International Conference on Acoustics, Speech and Signal Processing, pp. 2148-2151, 1996. W. Zhao, R. Chellappa, and N. Nandhakumar, “Empirical performance analysis of linear discriminant classifiers”, Proceedings of CVPR, pp. 164-169, 1998. W. Zhao, R. Chellapa, and A. Krishnaswamy, "Discriminant analysis of principal components for face recognition", Proceedings of Automatic Face and Gesture Recognition, pp. 336-341, 1998. W. Zhao, A. Krishnaswamy, R. Chellappa, D. Swet, and J. Weng, "Discriminant Analysis of Principal Components for Face Recognition", Face Recognition: From Theory to Application, H. Wechsler, P.J. Phillips, V. Bruce, F.F. Soulie, and T.S. Huang, eds., pp. 73-85, Berlin: Springer-Verlag, 1998. D.L. Swets and J. Weng, "Using Discriminant Eigenfeatures for Image Retrieval", IEEE Trans. on PAMI, Vol. 18, No. 8, pp. 831-836, 1996. R.P.W. Duin,and R. Haeb-Umbach, "Multiclass Linear Dimension Reduction by Weighted Pairwise Fisher Criteria", IEEE Trans. on PAMI, Vol. 23, No. 7, pp. 762-766, July, 2001. C. Liu, and H. Wechsler, “A shape- and texture-based enhanced fisher classifier for face recognition”, IEEE Trans. on Image Processing, Vol. 10, No. 4, pp. 598-608, April, 2001. C. Liu, and H. Wechsler, “Gabor Feature Based Classification Using the Enhanced Fisher Linear Discriminant Model for Face Recognition”, IEEE Trans. on Image Processing, Vol. 11, No. 4, pp. 467-476, April, 2002. C. Liu, and H. Wechsler, "Gabor Feature Classifier for Face Recognition", Proceedings of ICCV, Vol. 2, pp. 270-275, 2001. H. Yu and J. Yang, “A direct LDA algorithm for high-dimensional data-with application to face recognition”, Pattern Recognition, Vol. 34, pp. 2067-2070, 2001. L. Chen, H. Liao, M. Ko, J. Liin, and G. Yu, "A New LDA-based Face Recognition System Which can Solve the Samll Sample Size Problem", Pattern Recognition, Vol. 33, No. 10, pp. 1713-1726, Oct. 2000. C. Liu and H. 
Wechsler, "Enhanced Fisher Linear Discriminant Models for Face Recognition", Proceedings of ICPR, Vol. 2, pp. 1368-1372, 1998.
H. Kim, D. Kim, and S. Y. Bang, "Face Recognition Using LDA Mixture Model", Proceedings of ICPR, pp. 486-489, 2002.
Y. Bing, J. Lianfu, and C. Ping, "A New LDA-based Method for Face Recognition", Proceedings of ICPR, pp. 168-171, 2002.
Y. Bing, C. Ping, and J. Lianfu, "Recognizing Faces with Expressions: Within-class Space and Between-class Space", Proceedings of ICPR, pp. 139-142, 2002.
R. Huang, Q. Liu, H. Lu, and S. Ma, "Solving Small Sample Size Problem of LDA", Proceedings of ICPR, pp. 29-32, 2002.
H. Kim, D. Kim, and S. Y. Bang, "Extensions of LDA by PCA Mixture Model and Class-Wise Features," Pattern Recognition, pp. 1095-1105, Vol. 36, 2003.

Bayesian

B. Moghaddam, "Principal Manifolds and Probabilistic Subspaces for Visual Recognition", IEEE Trans. on PAMI, Vol. 24, No. 6, pp. 780-788, June 2002.
B. Moghaddam, T. Jebara, and A. Pentland, "Bayesian Face Recognition", Pattern Recognition, Vol. 33, pp. 1771-1782, 2000.
B. Moghaddam, and A. Pentland, "Probabilistic Visual Learning for Object Representation", IEEE Trans. on PAMI, Vol. 19, No. 7, pp. 775-779, July, 1997.
B. Moghaddam, C. Nastar, and A. Pentland, "Bayesian Face Recognition with Deformable Image Models", Proceedings of Image Analysis and Processing, pp. 26-35, 2001.
B. Moghaddam, W. Wahid, and A. Pentland, "Beyond eigenfaces: probabilistic matching for face recognition", Proceedings of Int'l Conf. on Automatic Face and Gesture Recognition (FG'98), pp. 30-35, April, 1998.

Other subspace methods

Pong C. Yuen, and J.H. Lai, "Face representation using independent component analysis", Pattern Recognition, Vol. 35, pp. 1247-1257, 2002.
T. Kamei, "Face Retrieval by An Adaptive Mahalanobis Distance Using A Confidence Factor," Proceedings of ICIP, pp. 153-156, 2002.
P. Comon, "Independent Component Analysis-A New Concept?", Signal Processing, Vol. 36, pp. 287-314, 1994.
M. S.
Bartlett, T.J. Sejnowski, "Independent Components of Face Images: A Representation for Face Recognition", Proceedings of the Fourth Annual Joint Symposium on Neural Computation, Pasadena, CA, May 17, 1997.
B. J. Frey, A. Colmenarez, and T. S. Huang, "Mixtures of local linear subspaces for face recognition", CVPR, 1998.
D. Swets, J. Weng, "Using discriminant eigenfeatures for image retrieval", IEEE Trans. on PAMI, Vol. 18, No. 8, pp. 831-836, August, 1996.
C. Liu, and H. Wechsler, "Evolutionary pursuit and its application to face recognition", IEEE Trans. on PAMI, Vol. 22, No. 6, pp. 570-582, June, 2000.
M. Yang, N. Ahuja and D. Kriegman, "Face recognition using kernel eigenfaces", Proceedings of ICIP, Vol. 1, pp. 37-40, 2000.
Q. Liu, R. Huang, H. Lu and S. Ma, "Kernel-Based Optimized Feature Vectors Selection and Discriminant Analysis for Face Recognition", Proceedings of ICPR, pp. 362-365, 2002.
A. Levy, and M. Lindenbaum, "Sequential Karhunen-Loeve basis extraction and its application to images", IEEE Trans. on Image Processing, Vol. 9, No. 8, pp. 1371-1374, August 2000.
A. Levy, and M. Lindenbaum, "Sequential Karhunen-Loeve basis extraction and its application to images", Proceedings of ICIP, Vol. 2, pp. 456-460, 1998.
K. Okada, C. Malsburg, "Analysis and Synthesis of Human Faces with Pose Variations by a Parametric Piecewise Linear Subspace Method", Proceedings of CVPR, pp. 761-768, 2001.
S. Z. Li, R. Xiao, Z. Li and H. Zhang, "Nonlinear Mapping from Multi-View Face Patterns to a Gaussian Distribution in a Low Dimensional Space", Proceedings of IEEE ICCV Workshop on Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems, pp. 47-54, 2001.
S. Z. Li, "Face Recognition Based on Nearest Linear Combinations", Proceedings of CVPR, pp. 839-844, 1999.
K. Lee, J. Ho and D. Kriegman, "Nine Points of Light: Acquiring Subspaces for Face Recognition under Variable Lighting", Proceedings of CVPR, Vol. 1, pp. 519-526, 2001.
J. Li, S. Zhou, and C.
Shekhar, "A Comparison of Subspace Analysis for Face Recognition," Proceedings of ICASSP, pp. 121-124, 2003.

Kernel

B. Scholkopf, A. Smola, and K. Muller, "Nonlinear Component Analysis as a Kernel Eigenvalue Problem," Neural Computation, 10:1299-1310, 1998.
B. Scholkopf, A. Smola, K. Muller, "Kernel Principal Component Analysis," In B. Scholkopf, C. Burges, and A. Smola, editors, Advances in Kernel Methods - Support Vector Learning, pages 327-352. MIT Press, 1999.
G. Baudat, and F. Anouar, "Generalized Discriminant Analysis Using a Kernel Approach," Neural Computation, 12(10):2385-2404, 2000.
S. Mika, G. Ratsch, J. Weston, B. Scholkopf, and K. Muller, "Fisher Discriminant Analysis with Kernels," In Y.-H. Hu, J. Larsen, E. Wilson, and S. Douglas, editors, Neural Networks for Signal Processing IX, pages 41-48. IEEE, 1999.
J. Lu, K. N. Plataniotis, and A. N. Venetsanopoulos, "Face Recognition Using Kernel Direct Discriminant Analysis," IEEE Trans. on Neural Networks, pp. 117-126, Vol. 14, No. 1, Jan. 2003.
M. Yang, N. Ahuja and D. Kriegman, "Face recognition using kernel eigenfaces", Proceedings of ICIP, Vol. 1, pp. 37-40, 2000.
Q. Liu, R. Huang, H. Lu and S. Ma, "Kernel-Based Optimized Feature Vectors Selection and Discriminant Analysis for Face Recognition", Proceedings of ICPR, pp. 362-365, 2002.
K. I. Kim, K. Jung, and H. J. Kim, "Face Recognition Using Kernel Principal Component Analysis," IEEE Signal Processing Letters, pp. 40-42, Vol. 9, No. 2, February, 2002.
T. V. Gestel, J. Suykens, G. Lanckriet, A. Lambrechts, B. D. Moor and J. Vandewalle, "Bayesian Framework for Least Squares Support Vector Machine Classifiers, Gaussian Processes and Kernel Fisher Discriminant Analysis," Neural Computation, May 2002.
J. A. K. Suykens, T. Van Gestel, J. Vandewalle, and B. De Moor, "A Support Vector Machine Formulation to PCA Analysis and its Kernel Version," ESAT-SCD-SISTA, Technical Report 2002-68, 2002.

Independent Component Analysis

M. S. Bartlett, J. R.
Movellan, and T. J. Sejnowski, "Face Recognition by Independent Component Analysis," IEEE Trans. on Neural Networks, pp. 1450-1464, Vol. 13, No. 6, Nov. 2002.
K. Baek, B. A. Draper, J. R. Beveridge, and K. She, "PCA vs. ICA: A Comparison on the FERET Data Set".
M. S. Bartlett, and T. J. Sejnowski, "Learning Viewpoint Invariant Representations for Faces in an Attractor Network," Presented at the 18th Cognitive Science Society Meeting, San Diego, CA, July 12-15, 1996.
H. S. Sahambi, and K. Khorasani, "A Neural-Network Appearance-Based 3-D Object Recognition Using Independent Component Analysis," IEEE Trans. on Neural Networks, pp. 138-149, Vol. 14, No. 1, Jan. 2003.
A. J. Bell, and T. J. Sejnowski, "An Information-maximisation Approach to Blind Separation and Blind Deconvolution," Neural Computation, Vol. 7, No. 6, pp. 1129-1159, 1995.
T. Lee, M. S. Lewicki and T. J. Sejnowski, "ICA Mixture Models for Unsupervised Classification of Non-Gaussian Sources and Automatic Context Switching in Blind Signal Separation," IEEE Trans. on PAMI, Vol. 22, No. 10, pp. 1-12, Oct. 2000.

Mixture Gaussian

C. Sanderson, "Face Processing & Frontal Face Verification," IDIAP Research Report, 2003.
M. E. Tipping, "Mixtures of Probabilistic Principal Component Analysers," Neural Computation, Vol. 11, pp. 443-482, 1999.
M. E. Tipping and C. M. Bishop, "Probabilistic principal component analysis," Technical Report Woe-19, Neural Computing Research Group, Aston University, UK, 1997.
C. M. Bishop, and M. E. Tipping, "A Hierarchical Latent Variable Model for Data Visualization," IEEE Trans. on PAMI, pp. 281-293, Vol. 20, No. 3, March, 1998.
G. E. Hinton, P. Dayan, and M. Revow, "Modeling the Manifolds of Images of Handwritten Digits," IEEE Trans. on Neural Networks, pp. 65-74, Vol. 8, No. 1, Jan. 1997.
G. E. Hinton, M. Revow, and P. Dayan, "Recognizing Handwritten Digits Using Mixtures of Local Models," Advances in Neural Information Processing Systems 7, pp. 1015-1022.
Cambridge, Mass: MIT Press, 1995.

Elastic bunch graph matching

L. Wiskott, J.M. Fellous, N. Kruger and C. von der Malsburg, "Face Recognition by Elastic Bunch Graph Matching," IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol. 19, No. 7, pp. 775-779, July, 1997.
L. Wiskott, J. Fellous, N. Kruger, and C. von der Malsburg, "Face Recognition by Elastic Bunch Graph Matching".
L. Wiskott, J.M. Fellous, N. Kruger and C. von der Malsburg, "Face Recognition by Elastic Bunch Graph Matching," Proceedings of ICIP, Vol. 1, pp. 129-132, 1997.
A. Tefas, C. Kotropoulos, and I. Pitas, "Using Support Vector Machines to Enhance the Performance of Elastic Graph Matching for Frontal Face Authentication", IEEE Trans. on PAMI, Vol. 23, No. 7, pp. 735-746, 2001.
A. Tefas, C. Kotropoulos and I. Pitas, "Using Support Vector Machines for Face Authentication Based on Elastic Graph Matching", Proceedings of ICIP, Vol. 1, pp. 29-32, 2000.
C. L. Kotropoulos, A. Tefas, and I. Pitas, "Frontal Face Authentication Using Discriminating Grids with Morphological Feature Vectors", IEEE Trans. on MultiMedia, Vol. 2, pp. 14-26, March 2000.
C. Kotropoulos, A. Tefas, and I. Pitas, "Frontal Face Authentication Using Morphological Elastic Graph Matching", IEEE Trans. on Image Processing, Vol. 9, No. 4, pp. 555-560, April 2000.
P. T. Jackway, and Mohamed Deriche, "Scale-Space Properties of the Multiscale Morphological Dilation-Erosion", IEEE Trans. on PAMI, Vol. 18, No. 1, pp. 38-51, Jan. 1996.
M. Lades, J. C. Vorbruggen, J. Buhmann, J. Lange, C. Malsburg, R. P. Wurtz and W. Konen, "Distortion Invariant Object Recognition in the Dynamic Link Architecture", IEEE Trans. on Computers, Vol. 42, No. 3, pp. 300-311, 1993.
J. G. Daugman, "Complete Discrete 2-D Gabor Transforms by Neural Networks for Image Analysis and Compression", IEEE Trans. on Acoustics, Speech and Signal Processing, Vol. 36, No. 7, July 1988.
B. Duc, S. Fischer, and J.
Bigun, "Face Authentication with Gabor Information on Deformable Graphs", IEEE Trans. on Image Processing, Vol. 8, No. 4, pp. 504-516, April, 1999.
N. Kruger, "An Algorithm for the Learning of Weights in Discrimination Functions Using a Priori Constraints", IEEE Trans. on PAMI, Vol. 19, No. 7, pp. 764-768, July 1997.
R. Lee, J. Liu and J. You, "Face Recognition: Elastic Relation Encoding and Structural Matching", Proceedings of International Conference on Systems, Man, and Cybernetics, Vol. 2, pp. 172-177, 1999.
L. Wiskott, "Phantom Faces for Face Analysis", Proceedings of ICIP, Vol. 3, pp. 308-311, 1997.
I. R. Fasel, and M. S. Bartlett, "A Comparison of Gabor Filter Methods for Automatic Detection of Facial Landmarks," Proceedings of International Conference on Automatic Face and Gesture Recognition, pp. 242-246, 2002.
W. Konen, and E. S. Kruger, "ZN-Face: A System for access control using automatic face recognition," Proceedings of Int. Workshop on Face and Gesture Recognition, 1995.

Locally Linear Embedding (LLE)

S. T. Roweis and L. K. Saul, "Nonlinear Dimensionality Reduction by Locally Linear Embedding," Science 290, 2323-2326, 2000.
L. K. Saul, S. T. Roweis, "An Introduction to Locally Linear Embedding".
A. Hadid, O. Kouropteva, and M. Pietikainen, "Unsupervised Learning Using Locally Linear Embedding: Experiments with Face Pose Analysis," Proceedings of ICPR, pp. 111-114, Vol. 1, 2002.
L. K. Saul and S. T. Roweis, "Think Globally, Fit Locally: Unsupervised Learning of Nonlinear Manifolds," Technical Report MS CIS-02-18, University of Pennsylvania, 2003.
O. Kouropteva, O. Okun, and M. Pietikainen, "Classification of Handwritten Digits Using Supervised Locally Linear Embedding algorithm and Support Vector Machine".
J. B. Tenenbaum, V. de Silva, and J. C. Langford, "A Global Geometric Framework for Nonlinear Dimensionality Reduction," Science, 290(5500):2319-2323, 2000.

Active appearance model

T. F. Cootes, G. J. Edwards, and C. J.
Taylor, "Active Appearance Models," IEEE Trans. on PAMI, Vol. 23, No. 6, pp. 681-685, June, 2001.
T. F. Cootes, and C. J. Taylor, "Constrained Active Appearance Models," Proceedings of ICCV, pp. 748-754, 2001.
A. Lanitis, C. J. Taylor, and T. F. Cootes, "Automatic Interpretation and Coding of Face Images Using Flexible Models," IEEE Trans. on PAMI, Vol. 19, No. 7, pp. 743-756, July 1997.
T. F. Cootes and C. J. Taylor, "Statistical Model of Appearance for Computer Vision," Technical Report, University of Manchester, Manchester M13 9PT, U. K., 2001.
M. B. Stegmann, Active Appearance Models: Theory, Extensions and Cases, Informatics and Mathematical Modelling, Technical University of Denmark, DTU, Richard Petersens Plads, Building 321, DK-2800 Kgs. Lyngby, 2000.
M. B. Stegmann, R. Fisker, B. K. Ersbøll, On Properties of Active Shape Models, Informatics and Mathematical Modelling, Technical University of Denmark, DTU, Richard Petersens Plads, Building 321, DK-2800 Kgs. Lyngby, 2000.
N. Costen, T. Cootes, G. Edwards, and C. Taylor, "Simultaneous Extraction of Functional Face Subspaces," Proceedings of CVPR, pp. 490-497, 1999.
Y. Yacoob and L. Davis, "Recognizing Facial Expressions by Spatio-Temporal Analysis", Proc. 12th Int'l Conf. Pattern Recognition, Vol. 1, pp. 747-749. Los Alamitos, Calif.: IEEE CS Press, 1994.
M. Covell, "Eigen-points: Control-point location using principal component analysis", In 2nd International Conference on Automatic Face and Gesture Recognition 1997, pp. 122-127, Killington, USA, 1996.
T. F. Cootes, A. Hill, C. J. Taylor and J. Haslam, "The Use of Active Shape Model for Locating Structures in Medical Images".
T. F. Cootes, G. Edwards and C. J. Taylor, "A Comparative Evaluation of Active Appearance Model Algorithms", In P. Lewis and M. Nixon, editors, 9th British Machine Vision Conference, volume 2, pages 680-689, Southampton, UK, Sept. 1998. BMVA Press.
A. Lanitis, C.J. Taylor and T.F.
Cootes, "A Unified Approach to Coding and Interpreting Face Images", Proceedings of ICCV, pp. 368-373, 1995.
T. F. Cootes, C. J. Taylor, D. H. Cooper and J. Graham, "Active Shape Models-Their Training and Application", CVIU, Vol. 61, No. 1, pp. 38-58, Jan. 1995.

Snake

M. Kass, A. Witkin, and D. Terzopoulos, "Snakes: Active Contour Models", International Journal of Computer Vision, pp. 321-331, 1988.

SVM

A. Tefas, C. Kotropoulos, and I. Pitas, "Using Support Vector Machines to Enhance the Performance of Elastic Graph Matching for Frontal Face Authentication", IEEE Trans. on PAMI, Vol. 23, No. 7, pp. 735-746, 2001.
Christopher J. C. Burges, "A Tutorial on Support Vector Machines for Pattern Recognition".
Y. Li, S. Gong, and H. Liddell, "Support Vector Regression and Classification Based Multi-View Face Detection and Recognition", In IEEE Int. Conf. on Face & Gesture Recognition, pages 300-305, France, 2000.
Z. Li, S. Tang, "Face Recognition Using Improved Pairwise Coupling Support Vector Machine".

Wavelet

M. J. Lyons, J. Budynek, and S. Akamatsu, "Automatic classification of single facial images", IEEE Trans. on PAMI, pp. 1357-1362, 1999.
K. Ma, and Xiaoou Tang, "Discrete wavelet face graph matching", Proceedings of ICIP, Vol. 2, pp. 217-220, 2001.
M. J. Lyons, J. Budynek, A. Plante, and S. Akamatsu, "Classifying facial attributes using a 2-D Gabor wavelet representation and discriminant analysis", Proceedings of IEEE International Conference on Automatic Face and Gesture Recognition, pp. 202-207, 2000.
R. S. Feris, and R. M. Cesar Jr., "Tracking facial features using Gabor wavelet networks", Proceedings XIII Brazilian Symposium on Computer Graphics and Image Processing, pp. 22-27, 2000.
P. Kalocsai, H. Neven, and J. Steffens, "Statistical analysis of Gabor-filter representation", Proceedings of International Conference on Automatic Face and Gesture Recognition, pp. 360-365, 1998.
B. Liu, and R.
Chellappa, "Gabor attributes tracking for face verification", Proceedings of ICIP, Vol. 1, pp. 45-48, 2000.
C. Liu, and H. Wechsler, "A Gabor feature classifier for face recognition", Proceedings of ICCV, Vol. 2, pp. 270-275, 2001.
O. Ayinde, and Y. Yang, "Face Recognition Approach Based on Rank Correlation of Gabor-Filtered Images", Pattern Recognition, Vol. 35, pp. 1275-1289, 2002.
Y. Fang, T. Tan, and Y. Wang, "Fusion of Global and Local Features for Face Verification", Proceedings of ICPR, pp. 382-385, 2002.
B. Gokberk, L. Akarun, and E. Alpaydin, "Feature Selection for Pose Invariant Face Recognition", Proceedings of ICPR, pp. 306-309, 2002.
H. Wu, Y. Yoshida and T. Shioyama, "Optimal Gabor Filters for High Speed Face Identification", Proceedings of ICPR, pp. 107-110, 2002.
B. Duc, S. Fischer and J. Bigun, "Face Authentication With Sparse Grid Gabor Information", Proceedings of ICASSP, Vol. 4, pp. 3053-3056, 1997.
I. R. Fasel, M. S. Bartlett, and J. R. Movellan, "A comparison of Gabor filter methods for automatic detection of facial landmarks", Proceedings of IEEE International Conference on Automatic Face and Gesture Recognition, pp. 242-246, 2002.
V. Kruger, A. Happe, and G. Sommer, "Affine real-time face tracking using Gabor wavelet networks", Proceedings of ICPR, 2002.
K. Hotta, T. Mishima, T. Kurita, and S. Umeyama, "Face matching through information theoretical attention points and its applications to face detection and classification", Proceedings of International Conference on Automatic Face and Gesture Recognition, pp. 34-39, 2000.
S. Cruz-Llanas, J. Ortega-Garcia, E. Martinez-Torrico, and J. Gonzalez-Rodriguez, "Comparison of feature extraction techniques in automatic face recognition systems for security applications", Proceedings of International Carnahan Conference on Security Technology, pp. 40-46, 2000.
R. Fazel-Rezai, and W.
Kinsner, "Image analysis and reconstruction using complex Gabor wavelets", 2000 Canadian Conference on Electrical and Computer Engineering, Vol. 1, pp. 440-444, 2000.

DCT

C. Sanderson, K.K. Paliwal, "Fast feature extraction method for robust face verification," Electronics Letters, Vol. 38, No. 25, pp. 1648-1650, 5 Dec. 2002.
Y. Nagasaka, A. Yoshikawa, and N. Suzumura, "Discrimination among Individuals Using Orthogonal Transformed Face Image," Proceedings of IEEE International Conference on Information, Communications and Processing, Sep. 1997.
Z. Pan, A. G. Rust, and H. Bolouri, "Image Redundancy for Neural Network Classification Using Discrete Cosine Transforms," Proceedings of the IEEE-INNS-ENNS International Joint Conference on Neural Networks (IJCNN 2000), Vol. 3, 2000.

Edge

Y. Gao, and M. K.H. Leung, "Face Recognition Using Line Edge Map", IEEE Trans. on PAMI, Vol. 24, No. 6, pp. 764-779, June 2002.
Y. Gao, M. K.H. Leung, "Line Segment Hausdorff Distance on Face Matching", Pattern Recognition, Vol. 35, pp. 361-371, 2002.
D. P. Huttenlocher, G. A. Klanderman, and W. J. Rucklidge, "Comparing Images Using the Hausdorff Distance", IEEE Trans. on PAMI, Vol. 15, No. 9, pp. 850-862, Sep. 1993.
B. Takacs, "Comparing Face Images Using The Modified Hausdorff Distance", Pattern Recognition, Vol. 31, No. 12, pp. 1873-1881, 1998.
D. P. Huttenlocher, and W. J. Rucklidge, "A multi-resolution technique for comparing images using the Hausdorff distance", Proceedings of CVPR, pp. 705-706, 1993.
D. P. Huttenlocher, G. A. Klanderman, and W. J. Rucklidge, "Comparing images using the Hausdorff distance under translation", Proceedings of CVPR, pp. 654-656, 1992.
O. Jesorsky, K. J. Kirchberg, and R. W. Frischholz, "Robust Face Detection Using the Hausdorff Distance", Audio- and Video-Based Person Authentication - AVBPA 2001.

Optical Flow

X. Liu, T. Chen, and B.V.K. V. Kumar, "Face Authentication for Multiple Subjects Using Eigenflow," Pattern Recognition, Vol.
36, pp. 313-328, 2003.
X. Liu, T. Chen, and B.V.K. V. Kumar, "On Modeling Variations for Face Authentication," Proceedings of IEEE International Conference on Automatic Face and Gesture Recognition, pp. 384-389, 2002.

Multi-Modalities

A. Ross, and A. Jain, "Information Fusion in Biometrics," Pattern Recognition Letters, pp. 2115-2125, Vol. 24, 2003.
U. M. Bubeck, "Multibiometric Authentication-An Overview of Recent Developments," 2003.
L. Hong, A. K. Jain, and S. Pankanti, "Can Multibiometrics Improve Performance?", Proc. of AutoID'99, pp. 59-64, Summit (NJ), USA, Oct. 1999.
L. Hong and A. K. Jain, "Integrating Faces and Fingerprints for Personal Identification," IEEE Trans. on PAMI, Vol. 20, No. 12, pp. 1295-1307, 1998.
L. I. Kuncheva, C. J. Whitaker, C.A. Shipp, and R.P.W. Duin, "Is Independence Good for Combining Classifiers?", Proc. of ICPR, Vol. 2, pp. 168-171, 2001.
A. K. Jain and A. Ross, "Learning User-Specific Parameters in a Multibiometrics System," Proc. of ICIP, 2002.
E. S. Bigun, J. Bigun, B. Duc, and S. Fischer, "Expert Conciliation for Multimodal Person Authentication Systems Using Bayesian Statistics," Proc. AVBPA'97, pp. 291-300, March, 1997.
A. Kumar, D. C. Wong, H. C. Shen, and A. K. Jain, "Personal Verification using Palmprint and Hand Geometry Biometric," Proc. of AVBPA'03.
P. Verlinde, G. Chollet, "Comparing Decision Fusion paradigms Using k-NN Based Classifiers, Decision Trees and Logistic Regression in Multi-modal Identity Verification Application," Proc. of AVBPA'99, pp. 188-193, 1999.
R. W. Frischholz, and U. Dieckmann, "BioID: A Multimodal Biometric Identification System," IEEE Computer, Vol. 33, No. 2, pp. 64-68, 2000.
U. Dieckmann, P. Plankensteiner, and T. Wagner, "SESAM: A Biometric Person Identification System Using Sensor Fusion," Pattern Recognition Letters, Vol. 18, pp. 827-833, 1997.
Y. Wang, T. Tan, and A. K. Jain, "Combining Face and Iris Biometrics for Identity Verification," Proc. of 4th Int'l Conf.
on Audio- and Video-Based Biometric Person Authentication (AVBPA), Guildford, UK, June 9-11, 2003.
S. Prabhakar, and A. K. Jain, "Decision-Level Fusion in Fingerprint Verification," Pattern Recognition, Vol. 35, pp. 861-874, 2002.
J. Zhou, and D. Zhang, "Face Recognition by Combining Several Algorithms," Proc. of ICPR, pp. 497-500, 2002.
T. Wark, S. Sridharan, and V. Chandran, "The Use of Temporal Speech and Lip Information for Multi-Modal Speaker Identification via Multi-Stream HMM's," Proc. of ICASSP, Vol. 6, pp. 2389-2392, 2000.
M. Tistarelli, E. Grosso, "Active Face Recognition with a Hybrid Approach," Pattern Recognition Letters, Vol. 18, pp. 933-946, 1997.
G. Valentini, F. Masulli, "Ensembles of Learning Machines."
S. Kumar, J. Ghosh, M. M. Crawford, "Hierarchical Fusion of Multiple Classifier for Hyperspectral Data Analysis," Pattern Analysis & Application, pp. 210-220, 2002.
T. Kam Ho, "The Random Subspace Method for Constructing Decision Forests," IEEE Trans. on PAMI, Vol. 20, No. 8, pp. 832-844, August 1998.
Y. S. Huang, and C. Y. Suen, "A Method of Combining Multiple Experts for the Recognition of Unconstrained Handwritten Numerals," IEEE Trans. on PAMI, Vol. 17, No. 1, pp. 90-94, Jan. 1995.
K. Woods, W. Philip Kegelmeyer Jr., and K. Bowyer, "Combination of Multiple Classifiers Using Local Accuracy Estimates," IEEE Trans. on PAMI, Vol. 19, No. 4, pp. 405-410, April, 1997.
W. P. Kegelmeyer, and K. Bowyer, "Combination of Multiple Classifier Using Local Accuracy Estimates," IEEE Trans. on PAMI, Vol. 19, No. 4, pp. 405-410, 1997.
F. Roli, and G. Giacinto, "Design of Multiple Classifier Systems".
G. Giacinto, and F. Roli, "An Approach to the Automatic Design of Multiple Classifier Systems," Pattern Recognition Letters, Vol. 22, pp. 25-33, 2001.
L. I. Kuncheva, "Switching Between Selection and Fusion in Combining Classifiers: An Experiment," IEEE Trans. on Systems, Man, and Cybernetics, Part B, Vol. 32, No. 2, April, 2002.
X. Jing, D. Zhang, and J.
Yang, "Face Recognition Based on A Group Decision-Making Combination Approach," Pattern Recognition, Vol. 36, pp. 1675-1678, 2003.
L. Xu, A. Krzyzak, C. Y. Suen, "Method of Combining Multiple Classifiers and Their Applications to Handwriting Recognition," IEEE Trans. on Systems, Man, and Cybernetics, Vol. 22, No. 3, pp. 418-435, 1992.
T. Kam Ho, J. Hull, S. Srihari, "Decision Combination in Multiple Classifier Systems," IEEE Trans. on PAMI, Vol. 16, No. 1, pp. 66-75, Jan. 1994.
R. Brunelli, and D. Falavigna, "Person Identification Using Multiple Cues," IEEE Trans. on PAMI, Vol. 17, No. 10, pp. 955-966, 1995.
X. Lu, A. Jain, "Resampling for Face Recognition," Proc. of AVBPA, 2003.
J. Kittler, J. Matas, K. Jonsson, M. Sanchez, "Combining Evidence in Personal Identity Verification Systems," Pattern Recognition Letters, Vol. 18, pp. 845-852, 1997.
S. Ben-Yacoub, Y. Abdeljaoued, and E. Mayoraz, "Fusion of Face and Speech Data for Person Identity Verification," IEEE Transactions on Neural Networks, pp. 1065-1074, Vol. 10, No. 5, September 1999.
S. Ben-Yacoub, Y. Abdeljaoued, and E. Mayoraz, "Fusion of Face and Speech Data for Person Identity Verification," IDIAP-RR 99-03, 1999.
J. Kittler, M. Hatef, R. P. Duin, and J. Matas, "On Combining Classifiers," IEEE Trans. on PAMI, pp. 226-239, Vol. 20, No. 3, March, 1998.
L. I. Kuncheva, J. C. Bezdek, and R. P.W. Duin, "Decision Templates for Multiple Classifier Fusion: An Experimental Comparison," Pattern Recognition, Vol. 34, pp. 299-314, 2001.
T. Hothorn, B. Lausen, "Double-Bagging: Combining Classifiers by Bootstrap Aggregation," Pattern Recognition, Vol. 36, pp. 1303-1309, 2003.
B. Duc, E. S. Bigun, J. Bigun, G. Maitre, and S. Fischer, "Fusion of Audio and Video Information for Multi Modal Person Authentication," Pattern Recognition Letters, Vol. 18, pp. 835-843, 1997.
D. Genoud, G. Gravier, F. Bimbot, and G. Chollet, "Combining Methods to Improve Speaker Verification Decision," IDIAP Research Report, 1996.
J. Yang, J. Yang, D.
Zhang, and J. Lu, "Feature Fusion: Parallel Strategy vs. Serial Strategy," Pattern Recognition, Vol. 36, pp. 1369-1381, 2003.
L. Breiman, "Bagging Predictors," Technical Report, No. 421, Sep. 1994.
L.I. Kuncheva, F. Roli, G.L. Marcialis, and C. A. Shipp, "Complexity of Data Subsets Generated by the Random Subspace Method: An Experimental Investigation," Lecture Notes in Computer Science, 2001.
F. Roli, G. Giacinto, and G. Vernazza, "Methods for Designing Multiple Classifier Systems," Proc. of the Second International Conference on Multiple Classifier Systems, 2001.
G. Giacinto, and F. Roli, "Dynamic Classifier Selection," Proc. of the First International Conference on Multiple Classifier Systems, 2000.
J. Kittler, "A Framework for Classifier Fusion: Is it Still Needed?", 2000.
T. Kam Ho, "Data Complexity Analysis for Classifier Combination," Proc. of the Second International Conference on Multiple Classifier System, 2001.
M. Skurichina, L. I. Kuncheva, and R.P.W. Duin, "Bagging and Boosting for the Nearest Mean Classifier: Effects of Sample Size on Diversity and Accuracy," Proc. of the Third International Conference on Multiple Classifier System, 2002.
M. Skurichina, L. I. Kuncheva, and R.P.W. Duin, "Bagging, Boosting, and the Random Subspace Method for Linear Classifier," Pattern Analysis and Applications, 2002.
D. Partridge, and W. B. Yates, "Engineering Multiversion Neural-Net Systems," Neural Computation, Vol. 8, pp. 869-893, 1996.